
DBS Requirements


Usage Narratives and Use Cases for People Interacting with Data Management

The usage narratives cover human interaction with the system. Physicists are the main actors in most of the usage narratives, which are organized according to the typical sequence of events: discover datasets, submit a job, debug, evaluate results, and share them. The use cases describe applications interacting with each other and with the DBS.

Contents

- Usage Narratives and Use Cases for People Interacting with Data Management
  - Usage Narratives for People Interacting with Data Management
    - Find a Dataset Useful for Higgs Research
    - Request A Type of Dataset to be Delivered
    - Manager Maintains Data Transfer for Physics Collaboration
    - Physicist Offers Data to Off-site Collaborator
    - Run Analysis as Many Jobs And Combine Results
    - Analyze a Physics Event Whose Signature Does Not Correspond Exactly To One Trigger Stream
    - Rerun a Previous Analysis with New Software
    - Continue a Previous Analysis on New Data
    - Generate Signal Monte Carlo Whose Processing Matches A Given Dataset
    - Generate Signal MC to Test for Rare Events
    - Debugging an Analysis
    - Check Results of a Running Analysis
    - Mark a Block of Runs or Events as Bad
    - Compare Why Two Analyses Had Different Results
    - Publish a Dataset to Global Scope
    - Recover Failed DBS
    - Troubleshoot Problems with Detector Operation Using Analysis Output
    - Validate Reconstruction Production is Working Well
  - Use Cases for Data Management Applications Interacting With DBS
    - Prodagent Runs an Analysis Whose Result is Not Going to Global Scope
    - Prodagent Accepts Several Datasets as Input to an Analysis with a Single Output Dataset
    - Prodagent Configures Monte Carlo Generation to Match Given Data Dataset
    - Provenance Tool Assembles Metadata on the Processing of a Dataset
    - Provenance Tool Publishes a Dataset to Local Scope
    - Provenance Tool Compares the Processing of Two Datasets
    - Phedex Transfers Dataset Files for Tier 2 Production
    - Phedex Keeps a Dataset Updated with Newly-Produced File Blocks
    - Workflow Tool Runs Prodagent
    - Prodagent Runs Tier 0 Production of Monte Carlo and RECO

Usage Narratives for People Interacting with Data Management

Find a Dataset Useful for Higgs Research

A physicist new to CMS sits down at his desk, opens the wiki, and finds a web application for browsing CMS datasets. He is happy to browse text descriptions of the primary datasets, but then he wants to see what is available for a particular primary dataset, including how much data and Monte Carlo has been produced for it and how much data and Monte Carlo the collaboration plans to make. The tool identifies which Tier 2 handles this primary dataset, because the physicist might have permission to run analyses only at certain facilities. Each data dataset produced by Tier 0 production for this primary dataset is associated with particular detector conditions, and the tool shows those. It also describes the parameters of the Monte Carlo datasets in the primary dataset, identifying each as generic, particular signal MC, or particular background. There may be published datasets that result from further processing of the production datasets. The tool presents these, allowing the physicist to drill down for more information on them. There is a searchable list of all datasets and a tree view in which root nodes represent earlier processing. The physicist browses these, noting which people have published relevant datasets, and sends email to one of them he knows. He chooses to run his analysis at a Tier 2 where his favorite primary dataset is local and starts preparing the job. The tool gives him an identifier for the primary dataset, and he can tell Prodagent to process a run range, an event range, or all events.

Request A Type of Dataset to be Delivered

A physicist at a Tier 2 collaboration knows what kinds of physics analyses the group would like to do. For some early work, they want to look at the AOD data. While it is usually best to move the computation to the data, this physicist wants the data local. He looks through the primary trigger descriptions to find which kinds of data are relevant to the group's physics. Choosing one of these primary datasets, he looks for AOD datasets within it. He chooses one or more of these AOD datasets and notifies Phedex that he wants them sent to the local system. Phedex estimates when that data will arrive and be ready to process.

Manager Maintains Data Transfer for Physics Collaboration

Someone managing Tier 2 data operations knows that the site will be responsible for processing a subset of the primary datasets. The manager tells Phedex to transfer all data from those datasets. Phedex updates the available data daily, downloading more as it becomes available. The Tier 2 manager can see what data is coming and so can make sure there is space for it.

Physicist Offers Data to Off-site Collaborator

When collaborating remotely, two physicists may exchange datasets that they have processed with their own analyses. To do this, one person must give the other permission to get the dataset, and the other then asks Phedex to retrieve it. The only way to publish a dataset is to make it available in the global scope, but a collaboration that does not yet want to make its data globally available may still want to share that data with a remote collaborator.

Run Analysis as Many Jobs And Combine Results

An analysis code takes a long time to process even a few events. A physicist runs it on the Grid as several separate jobs and then wants to treat the results as a single dataset. Prodagent, which runs the jobs, tracks the history of all of them with the help of the DBS. When the physicist wants to combine the datasets, he specifies all of them as input to the next job, and that job's output is a single dataset.

Analyze a Physics Event Whose Signature Does Not Correspond Exactly To One Trigger Stream

This experiment is such a target-rich environment that it throws away many events. If physicist Denny is looking for a physics event that may appear in several trigger streams, he has to pay close attention to the selection criteria of each trigger, because those criteria determine which of his events each stream loses. For instance, he may want to examine events with more than twelve tracks, but a particular trigger stream contains only events with more than thirteen tracks, so he has to recover the events with exactly thirteen tracks from one or more other trigger streams. If he forgets to include a trigger stream that contains some of his events, the efficiencies will be wrong, and so will the physics results.

Denny would like a fast way to analyze the particular physics events he cares about. He wants to find candidate events with a series of analysis steps. The first step is a rough cut, such as counting tracks or checking for a high-pt muon; the later steps are more involved analysis. He would like to do the rough cut quickly by jumping directly to the relevant events in the event collections. There are several ways to support this. Each event could carry a summary “thumbnail” holding tags that describe basic physics information. An index file for a particular physics event could point to individual events in datasets. A skim could be created, which is a new dataset containing copies of events from other datasets. CMS seems to be using skims and, maybe, TAGS files.

Rerun a Previous Analysis with New Software

Continue a Previous Analysis on New Data

Last week, physicist Martha ran an analysis on a sample, and it turned out well. The sample contained events corresponding to a physics process relevant to her research, and those events had been culled from a particular trigger stream. This week there are more events in that sample, so she wants to analyze the new events. She looks at what she analyzed before, identifying that set of events by the date of her analysis, by which datasets were in the sample at that time, or by which events were included in the job she ran; any of these would do. Then she specifies that the new job should run over the remaining events in the sample.

Generate Signal Monte Carlo Whose Processing Matches A Given Dataset

A physicist wants to analyze a data dataset by running Monte Carlo that matches that dataset. He knows which dataset to analyze, so he specifies that particular AOD dataset and asks for a certain integrated luminosity of Monte Carlo to be created and processed into AOD Monte Carlo.

Generate Signal MC to Test for Rare Events

Peter wants to test how well his code finds XXX events, but such an event occurs only once in every billion events of the generic MC. He therefore uses the detector conditions (and what else?) for XXX to build a dataset with many more XXX events than usual.

Debugging an Analysis

- Automatic log checkers.
- Job report: number of events, output, exceptions, files opened, files written, branches (data objects written).
- If a job is failing, it is easy to remember to keep its logs. If a job ran with strange behavior, someone may notice only months later, so every job must leave breadcrumbs.
- Should more debugging information be captured for the physicist in a standardized way?
- Which filters were chosen? There can be more than one module per configuration.

Check Results of a Running Analysis

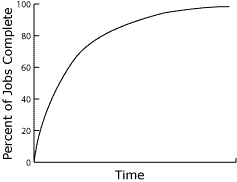

Physicist Genya is waiting for his job to complete. He sees that much of the analysis is complete and its output is available, but the rest of his data is taking a long time to return. Typical Grid jobs return most data quickly but take much longer to handle failed jobs, as shown in the heuristic graph. He looks at the existing results by hand, meaning that he logs into a machine that has the results and interactively runs a short analysis against the file. Then he wants to run a SW Framework analysis over the parts of the first analysis that are complete. He constructs a new analysis that specifies the currently available results as input and submits that job.
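Genya's follow-up job amounts to taking a snapshot of whichever outputs exist right now. A minimal Python sketch, assuming a hypothetical per-job status record: the `JobOutput` class, its status strings, and the file names are illustrative only, not a Prodagent or DBS interface.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class JobOutput:
    job_id: int
    status: str                 # hypothetical states: "done", "running", "failed"
    output_file: Optional[str]  # None until the job has written its output


def available_inputs(jobs: List[JobOutput]) -> List[str]:
    """Output files of jobs that finished successfully, ordered by job id."""
    return [j.output_file for j in sorted(jobs, key=lambda j: j.job_id)
            if j.status == "done" and j.output_file is not None]


def still_pending(jobs: List[JobOutput]) -> List[int]:
    """Ids of jobs whose output is not yet available (still running or failed)."""
    return sorted(j.job_id for j in jobs if j.status != "done")


jobs = [
    JobOutput(1, "done", "genya_out_001.root"),
    JobOutput(2, "running", None),
    JobOutput(3, "done", "genya_out_003.root"),
    JobOutput(4, "failed", None),
]
print(available_inputs(jobs))  # ['genya_out_001.root', 'genya_out_003.root']
print(still_pending(jobs))     # [2, 4]
```

Keeping the pending job ids next to the input list is one way to let a later job pick up only the outputs that arrive after the snapshot.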


Mark a Block of Runs or Events as Bad

Even though events passed validation at the detector and in reconstruction, they may still have a problem that precludes using them. It is possible that the reconstruction was good but that a later analysis step had a problem; for instance, a cluster node may have had a bad FPU. A physicist tells the data manager about the problem. Instead of rerunning the analysis, the data manager wants to exclude certain events so that physicists doing analysis will not accidentally get the bad events when they specify this sample. At the same time, the data manager may not yet be prepared to remove those events from the dataset, possibly because the bad events could be crucial to understanding imperfections in earlier analysis.

Compare Why Two Analyses Had Different Results

Two scientists are looking for the same type of physics event. Starting from the same base reconstruction, one found sixteen events and the other found three. They want to find what differed between the two analyses, so they look up as much as they can about the processing each one did. Which datasets did they use? Which versions of processing did they use along the way? During processing, some subset of the parameters was relevant to the physics of the results. Were those parameters the same, and if not, how did they differ? (Elaborate on what parameters to compare.) One way to compare the analyses is to run the three-event analysis on the sixteen events from the other analysis.

Publish a Dataset to Global Scope

A physicist gets RECO data, runs his own analysis, makes a new skim, and publishes it back to the DBS. How does one take a single file and feed it back to the DBS? A tool is needed to publish a file to the DBS.

Recover Failed DBS

If the DBS machine fails, is there a failover to take its place? How do you reload a DBS? If the DBS information for a dataset were completely lost, what information exists from which to reconstruct its provenance? How could such provenance be inserted into the DBS?

Troubleshoot Problems with Detector Operation Using Analysis Output

At Compute Tier 0, tracking efficiency appears to have fallen off in the HLT. The output of the prompt reconstruction may show more clearly whether there is a problem, so a detector expert wants to look at prompt reconstruction data. The analysis program could be written in the SW Framework or in ROOT. Even though the prompt reconstruction has not yet processed all the data that will become the next reconstruction dataset, the detector expert wants access to the data that has been processed. It may be someone's job to check the first events that the prompt reconstruction produces. The detector expert may specify a data range by requesting the reconstruction for a set of events within a run, but this requires knowing in advance which events were being written at a particular time.

Validate Reconstruction Production is Working Well

A reconstruction expert wants to make sure that reconstruction is going well, so they check the first few events of the reconstruction at regular intervals. The reconstruction job is still in progress, and there is not yet a complete dataset. The reconstruction expert wants to run a SW Framework or ROOT job against the first events. If the reconstruction is not working well, the expert has to stop reconstruction, ensure that the resulting data is not used, and then either restart processing of the RAW data or contact the detector expert to troubleshoot.

Use Cases for Data Management Applications Interacting With DBS

Prodagent Runs an Analysis Whose Result is Not Going to Global Scope

| Name: | 2. Prodagent Runs an Analysis Whose Result is Not Going to Global Scope |
|---|---|
| Actors: | Prodagent, global DBS, local DBS, global DLS |
| Goal Level: | User goal |
| Scope: | Service scope |
| Description: | Prodagent receives configuration files describing a Monte Carlo job. It examines the input dataset and figures out what resources to use. It then runs the job and gathers results. |
| Revision: | Filled |
| Trigger: | Production manager submits a job, including a description of computational resources, simulation and reconstruction configuration templates, and what dataset to analyze, specified by a unique dataset name and set of event collections within that dataset. |
| Primary Scenario: | |
| Exceptions: | |
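The job submission in the Trigger row bundles four things: computational resources, configuration templates, a unique dataset name, and a set of event collections. A minimal Python sketch of that request as a validated record, assuming nothing about Prodagent's real interface: the `JobRequest` class, its field names, the `publish_scope` flag, and all example values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass
class JobRequest:
    """Hypothetical model of the job a production manager submits."""
    resources: Dict[str, str]          # description of computational resources (keys are illustrative)
    simulation_template: str           # simulation configuration template
    reconstruction_template: str       # reconstruction configuration template
    dataset_name: str                  # unique dataset name
    event_collections: FrozenSet[str]  # event collections within that dataset
    publish_scope: str = "local"       # this use case: the result is not going to global scope

    def __post_init__(self) -> None:
        # Reject requests that do not identify what to analyze.
        if not self.dataset_name:
            raise ValueError("a unique dataset name is required")
        if not self.event_collections:
            raise ValueError("at least one event collection must be selected")
        if self.publish_scope not in ("local", "global"):
            raise ValueError(f"unknown scope: {self.publish_scope!r}")


request = JobRequest(
    resources={"site": "T2_Example"},
    simulation_template="sim_template.cfg",
    reconstruction_template="reco_template.cfg",
    dataset_name="/HypotheticalPrimary/Processed/AOD",
    event_collections=frozenset({"collection_01", "collection_02"}),
)
print(request.publish_scope)  # local
```

Validating in `__post_init__` means a malformed request fails at submission rather than partway through the job.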

Prodagent Accepts Several Datasets as Input to an Analysis with a Single Output Dataset

Prodagent Configures Monte Carlo Generation to Match Given Data Dataset

Provenance Tool Assembles Metadata on the Processing of a Dataset

Provenance Tool Publishes a Dataset to Local Scope

Provenance Tool Compares the Processing of Two Datasets

Phedex Transfers Dataset Files for Tier 2 Production

Phedex Keeps a Dataset Updated with Newly-Produced File Blocks

Workflow Tool Runs Prodagent

Prodagent Runs Tier 0 Production of Monte Carlo and RECO

-- AndrewDolgert - 27 May 2006


Topic revision: r3 - 19 Jul 2006, AndrewDolgert

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
